
SMILA/Documentation/Pipelets

This page describes pipelines, pipelets and their lifecycle.

Concepts

A pipeline is a synchronous workflow, composed of components called pipelets, that processes a list of records given as input. Synchronous means that the invoker of the pipeline blocks until the execution has finished. If the execution is successful, a set of result records is returned that represents the result of the processing.

Often the list of input records consists of a single record representing a user request and the workflow executes a strict sequence of pipelets to produce a single result record. But it's also possible (especially when a pipeline is used as part of an asynchronous workflow) to send more than one record through the pipeline in one call to reduce the overhead of the pipeline invocation. Finally, more sophisticated pipelines can contain conditions and loops or they can change the number of records going through the pipeline.
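The idea of a strict sequence of pipelets working on a list of records can be illustrated with a minimal sketch. It does not use the SMILA API: `RecordStep`, `SequentialPipeline` and `PipelineDemo` are hypothetical names, and records are modelled as plain maps purely for illustration. The first step also shows how a pipeline can change the number of records passing through it.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for a pipelet: maps a list of records to a list of records.
interface RecordStep {
  List<Map<String, Object>> process(List<Map<String, Object>> records);
}

// Hypothetical pipeline: runs its steps in strict sequence on the caller's thread,
// so invoke() only returns once every step has finished (synchronous execution).
final class SequentialPipeline {
  private final List<RecordStep> steps;

  SequentialPipeline(List<RecordStep> steps) {
    this.steps = new ArrayList<>(steps);
  }

  List<Map<String, Object>> invoke(List<Map<String, Object>> input) {
    List<Map<String, Object>> current = input;
    for (RecordStep step : steps) {
      current = step.process(current);
    }
    return current;
  }
}

final class PipelineDemo {
  private static Map<String, Object> record(String text) {
    Map<String, Object> r = new HashMap<>();
    r.put("text", text);
    return r;
  }

  static List<Map<String, Object>> run() {
    // Step 1 changes the number of records: it drops records with empty text.
    RecordStep dropEmpty = records -> {
      List<Map<String, Object>> kept = new ArrayList<>();
      for (Map<String, Object> r : records) {
        String text = (String) r.get("text");
        if (text != null && !text.isEmpty()) {
          kept.add(r);
        }
      }
      return kept;
    };
    // Step 2 enriches each remaining record with a "length" attribute.
    RecordStep addLength = records -> {
      for (Map<String, Object> r : records) {
        r.put("length", ((String) r.get("text")).length());
      }
      return records;
    };

    List<RecordStep> steps = new ArrayList<>();
    steps.add(dropEmpty);
    steps.add(addLength);

    // Several records are sent through the pipeline in a single call.
    List<Map<String, Object>> input = new ArrayList<>();
    input.add(record("hello"));
    input.add(record(""));
    input.add(record("pipelets"));

    return new SequentialPipeline(steps).invoke(input);
  }

  public static void main(String[] args) {
    System.out.println(run());
  }
}
```

Sending three records in one `invoke()` call mirrors the batching described above: the invocation overhead is paid once, and the caller still receives the complete result list when the call returns.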

A pipelet is a POJO that implements the interface org.eclipse.smila.processing.Pipelet. Its lifecycle and configuration are managed by the workflow engine. An instance of a pipelet is not shared by multiple pipelines; even multiple occurrences of a pipelet in the same pipeline do not share the same instance. However, an instance may still be executed by multiple threads at the same time, for example if the same pipeline is executed in parallel, so a pipelet implementation must be thread-safe. The configuration of each pipelet instance is included in the pipeline description. Technical details on pipelet development can be found in How to write a Pipelet.
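The thread-safety requirement can be sketched as follows. This is not the real org.eclipse.smila.processing.Pipelet interface: `SketchPipelet` is a simplified, hypothetical stand-in, and its method shapes, the `UppercasePipelet` class and the configuration keys `inputAttribute` and `outputAttribute` are assumptions made for illustration. The point is the pattern: configuration is written once before processing starts, all per-invocation state lives in local variables, and the only shared mutable state is an atomic counter.

```java
import java.util.HashMap;
import java.util.Locale;
import java.util.Map;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical, simplified stand-in for a pipelet interface (not the SMILA API).
interface SketchPipelet {
  void configure(Map<String, Object> configuration);
  String[] process(Map<String, Map<String, Object>> records, String[] recordIds);
}

final class UppercasePipelet implements SketchPipelet {
  // Written once in configure(); volatile so that worker threads see the values.
  private volatile String inputAttribute = "text";
  private volatile String outputAttribute = "upper";
  // The only shared mutable state, kept thread-safe by using an atomic type.
  private final AtomicLong processed = new AtomicLong();

  @Override
  public void configure(Map<String, Object> configuration) {
    Object in = configuration.get("inputAttribute");
    if (in != null) {
      inputAttribute = in.toString();
    }
    Object out = configuration.get("outputAttribute");
    if (out != null) {
      outputAttribute = out.toString();
    }
  }

  @Override
  public String[] process(Map<String, Map<String, Object>> records, String[] recordIds) {
    // Per-invocation state is held in local variables only, never in fields.
    final String in = inputAttribute;
    final String out = outputAttribute;
    for (String id : recordIds) {
      Map<String, Object> record = records.get(id);
      Object value = record.get(in);
      if (value != null) {
        record.put(out, value.toString().toUpperCase(Locale.ROOT));
      }
      processed.incrementAndGet();
    }
    return recordIds;
  }

  long processedCount() {
    return processed.get();
  }
}

final class ThreadSafetyDemo {
  // Runs one shared pipelet instance from several threads, as parallel
  // executions of the same pipeline would, and returns the processed count.
  static long run(int threads, int recordsPerThread) {
    UppercasePipelet pipelet = new UppercasePipelet();
    Map<String, Object> config = new HashMap<>();
    config.put("inputAttribute", "title");
    pipelet.configure(config);

    ExecutorService pool = Executors.newFixedThreadPool(threads);
    for (int t = 0; t < threads; t++) {
      pool.execute(() -> {
        // Each simulated pipeline execution works on its own set of records.
        Map<String, Map<String, Object>> records = new HashMap<>();
        String[] ids = new String[recordsPerThread];
        for (int i = 0; i < recordsPerThread; i++) {
          ids[i] = "record-" + i;
          Map<String, Object> record = new HashMap<>();
          record.put("title", "hello");
          records.put(ids[i], record);
        }
        pipelet.process(records, ids);
      });
    }
    pool.shutdown();
    try {
      if (!pool.awaitTermination(30, TimeUnit.SECONDS)) {
        throw new IllegalStateException("workers did not finish in time");
      }
    } catch (InterruptedException e) {
      Thread.currentThread().interrupt();
      throw new IllegalStateException(e);
    }
    return pipelet.processedCount();
  }

  public static void main(String[] args) {
    System.out.println(run(4, 100));
  }
}
```

A plain `long` field incremented with `++` in place of the `AtomicLong` would be a typical thread-safety bug in this setting, because concurrent increments on the shared instance could be lost.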

The default SMILA workflow processing engine uses BPEL to describe pipelines. However, the pipelets do not depend on being called from a BPEL context, so it would be easy to replace the BPEL engine with a pipeline engine that uses a different description language and to continue using the same pipelet implementations.

Lifecycle

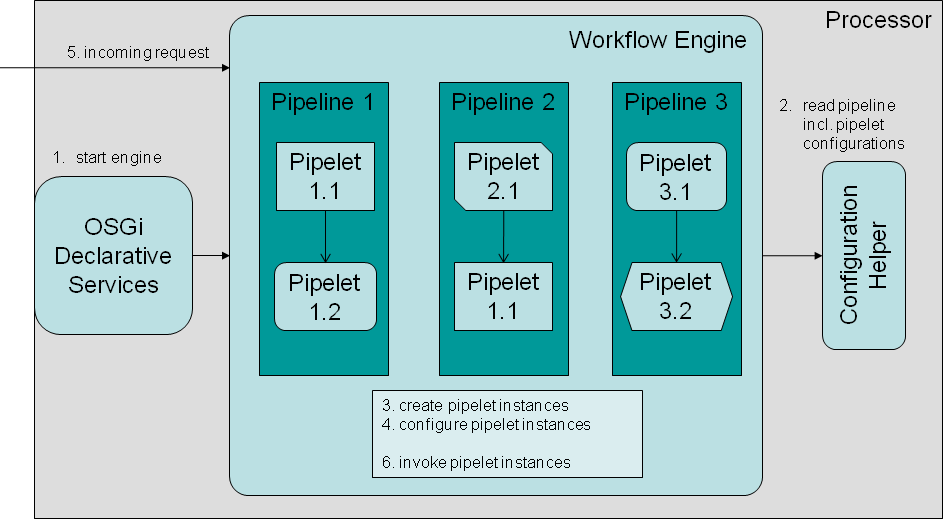

The following diagram shows the lifecycle of pipelets.

A pipelet instance lives inside the workflow engine. The pipelet is declared in the providing bundle by putting a JSON file containing a pipelet description in the SMILA-INF directory of the bundle (see How to write a Pipelet for details). When the engine starts, it reads the predefined pipeline definitions (i.e. BPEL workflows) from the configuration directory. The pipelines are inspected for pipelet invocations and for the pipelet configurations contained in the pipeline. For each such occurrence, the engine creates an instance of the specified pipelet class and injects the configuration into it. The pipelet instance is kept in the workflow engine until the engine is stopped (and as long as the bundle providing the pipelet is available, of course). So exactly one pipelet instance exists for each occurrence of a pipelet in a pipeline. The pipelet must be capable of parallel execution because each execution of a pipeline uses the same pipelet instances.
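The lifecycle described above can be approximated by a small sketch. It is not the SMILA workflow engine: `MiniEngine`, `PipeletInvocation`, `LifecyclePipelet` and `TaggingPipelet` are hypothetical names, the `declare()` call stands in for the JSON pipelet description in SMILA-INF, and the list of invocations stands in for what the engine would extract from a BPEL pipeline definition.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Supplier;

// Hypothetical, simplified pipelet contract: only the configuration step matters here.
interface LifecyclePipelet {
  void configure(Map<String, Object> configuration);
}

// One occurrence of a pipelet in a pipeline definition, with its own configuration.
final class PipeletInvocation {
  final String pipeletClass;
  final Map<String, Object> configuration;

  PipeletInvocation(String pipeletClass, Map<String, Object> configuration) {
    this.pipeletClass = pipeletClass;
    this.configuration = configuration;
  }
}

// Hypothetical engine illustrating the lifecycle: declare, instantiate per occurrence,
// configure, keep until stop.
final class MiniEngine {
  private final Map<String, Supplier<LifecyclePipelet>> declared = new HashMap<>();
  private final Map<String, List<LifecyclePipelet>> instances = new HashMap<>();

  // Stands in for the pipelet description provided by a bundle.
  void declare(String pipeletClass, Supplier<LifecyclePipelet> factory) {
    declared.put(pipeletClass, factory);
  }

  // Called at engine start for each pipeline definition that was found.
  void loadPipeline(String pipelineName, List<PipeletInvocation> invocations) {
    List<LifecyclePipelet> created = new ArrayList<>();
    for (PipeletInvocation invocation : invocations) {
      Supplier<LifecyclePipelet> factory = declared.get(invocation.pipeletClass);
      if (factory == null) {
        throw new IllegalArgumentException("undeclared pipelet: " + invocation.pipeletClass);
      }
      LifecyclePipelet pipelet = factory.get(); // one instance per occurrence
      pipelet.configure(invocation.configuration); // inject the configuration
      created.add(pipelet);
    }
    instances.put(pipelineName, created);
  }

  // Every execution of the pipeline uses these same instances.
  List<LifecyclePipelet> instancesOf(String pipelineName) {
    List<LifecyclePipelet> found = instances.get(pipelineName);
    if (found == null) {
      return Collections.emptyList();
    }
    return Collections.unmodifiableList(found);
  }

  // Instances are dropped when the engine stops.
  void stop() {
    instances.clear();
  }
}

final class TaggingPipelet implements LifecyclePipelet {
  private volatile Map<String, Object> configuration = Collections.emptyMap();

  @Override
  public void configure(Map<String, Object> configuration) {
    this.configuration = new HashMap<>(configuration);
  }

  Object tag() {
    return configuration.get("tag");
  }
}

final class LifecycleDemo {
  static MiniEngine startEngine() {
    MiniEngine engine = new MiniEngine();
    engine.declare("TaggingPipelet", TaggingPipelet::new);

    // The same pipelet class occurs twice in the pipeline, with different configurations.
    Map<String, Object> firstConfig = new HashMap<>();
    firstConfig.put("tag", "first");
    Map<String, Object> secondConfig = new HashMap<>();
    secondConfig.put("tag", "second");
    List<PipeletInvocation> invocations = new ArrayList<>();
    invocations.add(new PipeletInvocation("TaggingPipelet", firstConfig));
    invocations.add(new PipeletInvocation("TaggingPipelet", secondConfig));

    engine.loadPipeline("demo", invocations);
    return engine;
  }

  public static void main(String[] args) {
    System.out.println(startEngine().instancesOf("demo").size());
  }
}
```

In this sketch the two occurrences of `TaggingPipelet` yield two distinct instances, each holding its own configuration, and repeated calls to `instancesOf("demo")` return the same objects until `stop()` is called.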

Runtime Parameters

By convention, records can have a map element in the attribute _parameters, and pipelets should interpret the elements of this map as overrides for their configuration properties. The class org.eclipse.smila.processing.parameters.ParameterAccessor provides helper methods for checking whether the record has such "runtime parameters" set and for reading a value from there or, if it is not overridden, from the pipelet configuration. If necessary, the accessor can be told to look in another attribute, or even to use the top-level attributes of the record as parameters. The latter is used by org.eclipse.smila.search.api.helper.QueryParameterAccessor, because in search processing the convention is that query parameters are at the top level of the request record.
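The lookup order behind this convention can be sketched in a few lines. This is not the real ParameterAccessor API: `SketchParameterAccessor`, its factory methods and the example parameter `maxResults` are hypothetical, and records and configurations are modelled as plain maps. The sketch only shows the precedence: runtime parameter first, then pipelet configuration, then a default value.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical illustration of the runtime-parameter convention (not the SMILA API).
final class SketchParameterAccessor {
  static final String DEFAULT_PARAMETER_ATTRIBUTE = "_parameters";

  private final Map<String, Object> record;
  private final Map<String, Object> configuration;
  // Name of the attribute holding the parameter map; null means "use top-level attributes".
  private final String parameterAttribute;

  private SketchParameterAccessor(Map<String, Object> record,
      Map<String, Object> configuration, String parameterAttribute) {
    this.record = record;
    this.configuration = configuration;
    this.parameterAttribute = parameterAttribute;
  }

  // Looks for overrides in the record's "_parameters" map.
  static SketchParameterAccessor forDefaultAttribute(Map<String, Object> record,
      Map<String, Object> configuration) {
    return new SketchParameterAccessor(record, configuration, DEFAULT_PARAMETER_ATTRIBUTE);
  }

  // Looks for overrides in a differently named attribute.
  static SketchParameterAccessor forAttribute(Map<String, Object> record,
      Map<String, Object> configuration, String attribute) {
    return new SketchParameterAccessor(record, configuration, attribute);
  }

  // Looks for overrides in the top-level attributes of the record.
  static SketchParameterAccessor forTopLevel(Map<String, Object> record,
      Map<String, Object> configuration) {
    return new SketchParameterAccessor(record, configuration, null);
  }

  // Precedence: runtime parameter, then pipelet configuration, then the default value.
  Object getParameter(String name, Object defaultValue) {
    Map<String, Object> parameters = parameterMap();
    if (parameters != null && parameters.containsKey(name)) {
      return parameters.get(name);
    }
    if (configuration.containsKey(name)) {
      return configuration.get(name);
    }
    return defaultValue;
  }

  private Map<String, Object> parameterMap() {
    if (parameterAttribute == null) {
      return record;
    }
    Object value = record.get(parameterAttribute);
    if (value instanceof Map) {
      @SuppressWarnings("unchecked")
      Map<String, Object> parameters = (Map<String, Object>) value;
      return parameters;
    }
    return null; // no runtime parameters set on this record
  }
}

final class ParameterDemo {
  public static void main(String[] args) {
    Map<String, Object> configuration = new HashMap<>();
    configuration.put("maxResults", 10);

    Map<String, Object> overrides = new HashMap<>();
    overrides.put("maxResults", 5);
    Map<String, Object> record = new HashMap<>();
    record.put("_parameters", overrides);

    // The runtime parameter on the record takes precedence over the configuration.
    System.out.println(
        SketchParameterAccessor.forDefaultAttribute(record, configuration)
            .getParameter("maxResults", 0));
  }
}
```

The top-level variant corresponds to the search case mentioned above, where the request record itself carries the query parameters.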